Partial AI Explanations

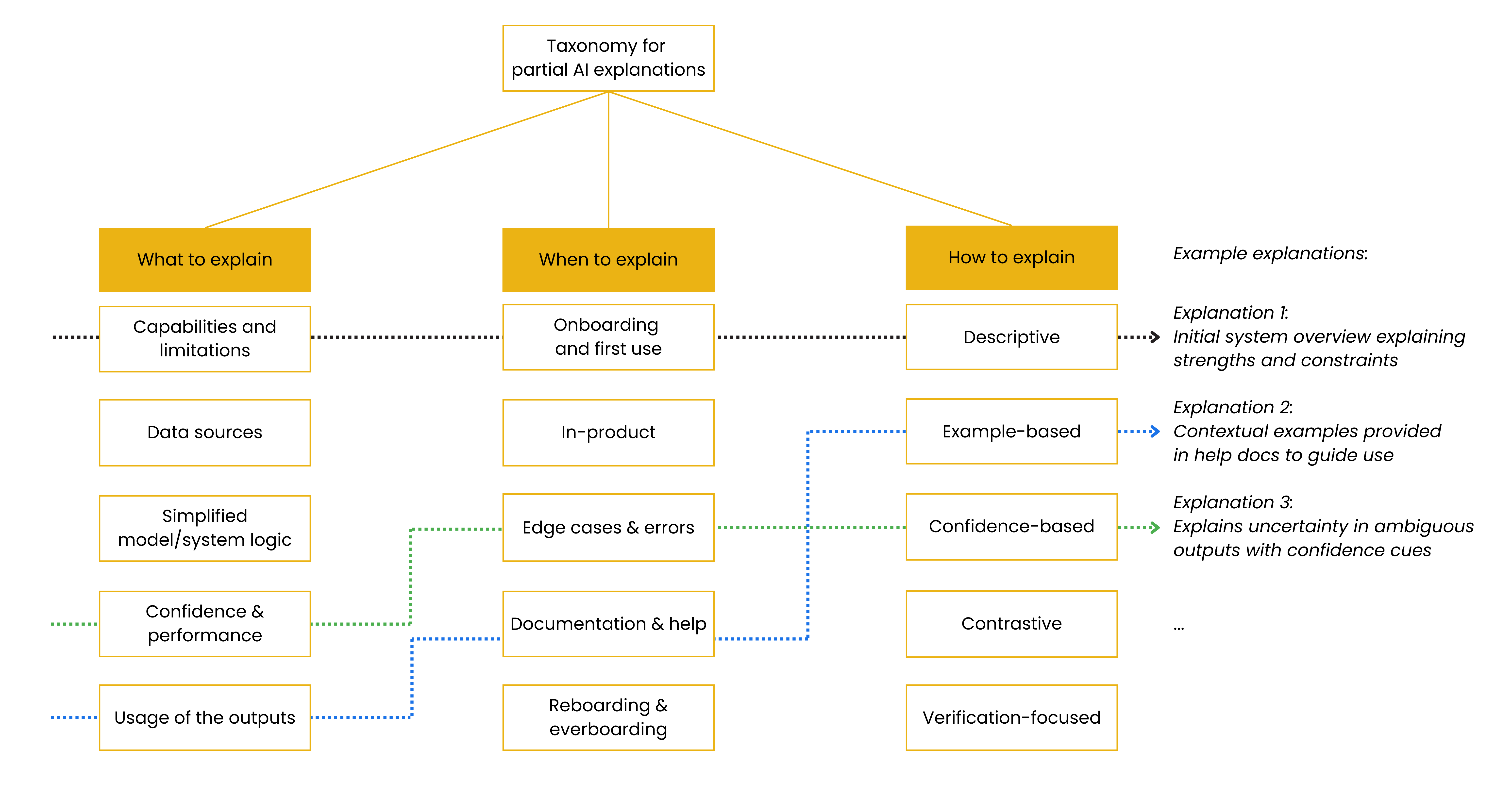

This mental model helps product teams craft targeted, contextual AI explanations by combining three dimensions: what the explanation should cover, how it should be presented, and when in the user journey it should appear. Rather than prescribing a single explanation strategy, the model encourages teams to consider all three dimensions for each user-facing feature, so they can choose the right level of transparency and support.

Principles

Design explanations with intention across three dimensions: what, how, and when.

No one-size-fits-all: adapt explanation depth and tone to user goals and context.

Support user understanding without overwhelming or overpromising.

Use examples to align with mental models and support trust calibration.

Transparency is most useful when it is actionable and well-timed.

Implementation steps

1. Choose what to explain

Select the conceptual focus of your explanation. Options include: capabilities & limitations, data sources, simplified model logic, performance/confidence, or how to act on outputs.

2. Choose how to explain it

Pick a format that matches the user's needs and the complexity of the content. Styles include example-based, confidence-based, contrastive, interactive, and verification-focused.

3. Choose when to explain it

Determine the right moment in the user journey: onboarding, in-product, error state, documentation/help, or everboarding (as the system evolves).
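Teams can record the three choices above as a small typed specification for each feature. The TypeScript sketch below is one minimal way to do that. The type names (`ExplanationFocus`, `ExplanationStyle`, `ExplanationMoment`, `ExplanationSpec`), the `describeSpec` helper, and the "smart-reply" feature are hypothetical. The option values mirror the lists in steps 1 to 3.

```typescript
// Hypothetical types mirroring the three dimensions: what, how, and when.
type ExplanationFocus =
  | "capabilities-and-limitations"
  | "data-sources"
  | "simplified-model-logic"
  | "performance-confidence"
  | "how-to-act-on-outputs";

type ExplanationStyle =
  | "example-based"
  | "confidence-based"
  | "contrastive"
  | "interactive"
  | "verification-focused";

type ExplanationMoment =
  | "onboarding"
  | "in-product"
  | "error-state"
  | "documentation-help"
  | "everboarding";

interface ExplanationSpec {
  feature: string;          // the user-facing feature this explanation belongs to
  what: ExplanationFocus[]; // conceptual focus (step 1)
  how: ExplanationStyle;    // presentation format (step 2)
  when: ExplanationMoment;  // moment in the user journey (step 3)
}

// Render a one-line summary so specs for different features can be reviewed side by side.
function describeSpec(spec: ExplanationSpec): string {
  return `${spec.feature} | what: ${spec.what.join(", ")} | how: ${spec.how} | when: ${spec.when}`;
}

// Example: a hypothetical "smart-reply" feature explained in context.
const smartReply: ExplanationSpec = {
  feature: "smart-reply",
  what: ["capabilities-and-limitations", "how-to-act-on-outputs"],
  how: "example-based",
  when: "in-product",
};

console.log(describeSpec(smartReply));
// → smart-reply | what: capabilities-and-limitations, how-to-act-on-outputs | how: example-based | when: in-product
```

Every field is a union of string literals. As a result, the compiler rejects a spec that leaves out a dimension or uses an option outside the lists, which pushes teams to decide all three dimensions deliberately.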

4. Test with real users

Check whether your explanation is understandable, trustworthy, and helpful. Iterate based on qualitative feedback and behavioral analytics.

Anti-patterns

Overloading: Explaining too much at once, especially in early onboarding flows.

Omitting critical guidance: Skipping confidence cues or fallback suggestions in high-risk moments.

One-size-fits-all: Using the same explanation across audiences and contexts.

Opaque AI behavior: Providing no way for users to understand or correct confusing outputs.

Purely technical language: Explaining model internals without helping users act on the output.
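A few of these anti-patterns can be caught before user testing by checking each explanation plan against simple rules. The TypeScript sketch below encodes three of them as heuristics. The `ExplanationPlan` shape, the `highRisk` flag, the `lintPlan` function, and the two-topic onboarding limit are hypothetical choices made for illustration. They are not an established standard.

```typescript
// Minimal, hypothetical plan shape that mirrors the what/when dimensions.
interface ExplanationPlan {
  feature: string;
  what: string[];    // e.g. "data-sources", "performance-confidence"
  when: string;      // e.g. "onboarding", "error-state"
  highRisk: boolean; // the team's own judgment of the stakes for this feature
}

// Illustrative threshold, not a researched number.
const MAX_ONBOARDING_TOPICS = 2;

// Return human-readable warnings for anti-patterns that can be detected from the plan alone.
function lintPlan(plan: ExplanationPlan): string[] {
  const warnings: string[] = [];

  // Overloading: too many topics packed into onboarding.
  if (plan.when === "onboarding" && plan.what.length > MAX_ONBOARDING_TOPICS) {
    warnings.push(`${plan.feature}: overloading (${plan.what.length} topics in onboarding)`);
  }

  // Omitting critical guidance: high-risk or error moments need confidence or next-step cues.
  const needsGuidance = plan.highRisk || plan.when === "error-state";
  const hasGuidance =
    plan.what.includes("performance-confidence") || plan.what.includes("how-to-act-on-outputs");
  if (needsGuidance && !hasGuidance) {
    warnings.push(`${plan.feature}: missing confidence cues or fallback guidance`);
  }

  // Purely technical language: model logic explained with no actionable guidance.
  if (plan.what.includes("simplified-model-logic") && !plan.what.includes("how-to-act-on-outputs")) {
    warnings.push(`${plan.feature}: model logic explained without telling users how to act`);
  }

  return warnings;
}

// A deliberately flawed, hypothetical plan: all three checks fire.
const plan: ExplanationPlan = {
  feature: "loan-prescreen",
  what: ["data-sources", "simplified-model-logic", "capabilities-and-limitations"],
  when: "onboarding",
  highRisk: true,
};

console.log(lintPlan(plan)); // logs three warnings
```

The one-size-fits-all and opaque-behavior anti-patterns are hard to detect from a plan alone. Testing with real users is more likely to surface them.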