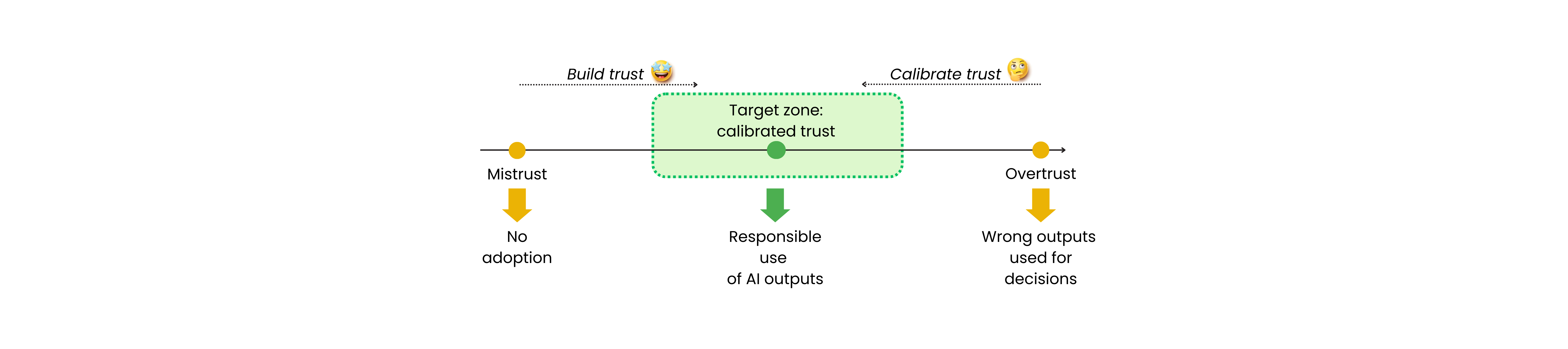

Trust Scale

Trust is crucial for offsetting the uncertainty and failure risks of AI. The Trust Scale is a mental model for intentionally designing and managing user trust. It spans a scale between two extremes: mistrust and overtrust. Avoid getting stuck at either extreme, and instead pull your users towards the "golden middle" of calibrated trust. There, they benefit from your system while using its outputs responsibly and mitigating potential risks.

Principles

Trust must be earned, calibrated, and adapted over time

Transparency about capabilities and limitations is essential

User trust is shaped more by UX and communication than by model accuracy alone

Overtrust can be as dangerous as mistrust

Critical use cases require stricter trust calibration

Implementation steps

1. Define trust metrics

Decide how you will measure user trust. You can use explicit data (like user tests and surveys) and implicit signals (such as how often users verify AI outputs or override system suggestions).

2. Set trust targets

Establish clear goals for desired trust levels based on the use case criticality. Define acceptable levels of skepticism, reliance, and verification behaviors.

3. Design trust calibration strategies

Specify how you will build and calibrate trust: transparency, user control, model performance improvements, explainability, co-creation, and other techniques (see the article listed under Resources). Combine multiple methods to create a robust trust strategy.

4. Implement and measure

Deploy trust-building mechanisms and monitor trust metrics over time. Adjust designs, messaging, and system behavior based on user responses and feedback loops.

5. Manage early experiences carefully

Focus on delivering visible, reliable value early in the user journey. Early experiences have a disproportionate impact on long-term trust. Educate users proactively and guide them through safe interactions with the AI system.
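
The metric and target steps above can be made concrete with a small sketch. It assumes you log each AI suggestion together with two implicit signals: whether the user verified the output and whether they overrode it. The `Interaction` schema, the `TARGETS` bands, and the function names are hypothetical and only illustrate the idea; real thresholds should come from your own user research, and verification rate alone is a simplification of what "calibrated" means.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interaction:
    """One logged AI suggestion and the user's response (hypothetical schema)."""
    verified: bool    # user checked the output against another source
    overridden: bool  # user rejected or edited the suggestion


@dataclass(frozen=True)
class TrustTarget:
    """Acceptable band for the verification rate (illustrative values only)."""
    min_verification: float
    max_verification: float


# Assumption: more critical use cases call for more verification.
TARGETS = {
    "low": TrustTarget(min_verification=0.05, max_verification=0.40),
    "high": TrustTarget(min_verification=0.50, max_verification=0.90),
}


def trust_signals(log):
    """Compute implicit trust metrics as fractions of all interactions."""
    if not log:
        raise ValueError("need at least one interaction")
    n = len(log)
    return {
        "verification_rate": sum(i.verified for i in log) / n,
        "override_rate": sum(i.overridden for i in log) / n,
    }


def classify_trust(log, criticality):
    """Place observed behavior on the scale relative to the target band."""
    target = TARGETS[criticality]
    rate = trust_signals(log)["verification_rate"]
    if rate < target.min_verification:
        return "overtrust"   # users rely on outputs with too little checking
    if rate > target.max_verification:
        return "mistrust"    # users re-check almost everything
    return "calibrated"


# Example log: 10 interactions, only the first one verified, none overridden.
log = [Interaction(verified=(k == 0), overridden=False) for k in range(10)]
signals = trust_signals(log)
low_result = classify_trust(log, "low")
high_result = classify_trust(log, "high")
```

With this example log, the same behavior counts as calibrated for a low-criticality use case but as overtrust for a high-criticality one, which is why targets are set per use case rather than globally. Tracking these signals over time, alongside explicit data such as surveys, gives you the feedback loop described in step 4.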

Anti-patterns

Black-box deployment: Launching AI systems without explaining how they work

Offloading trust to engineers: Treating trust as a function of model accuracy alone, when it is also built through UX, communication, and user education

Overselling capabilities: Setting unrealistic expectations about what the AI can do, leading to overtrust

Ignoring user feedback: Failing to incorporate user concerns and feedback into trust calibration strategies

One-size-fits-all trust: Applying the same trust-building approach to all users regardless of their technical literacy or risk tolerance

Resources

Article: Building and Calibrating Trust in AI