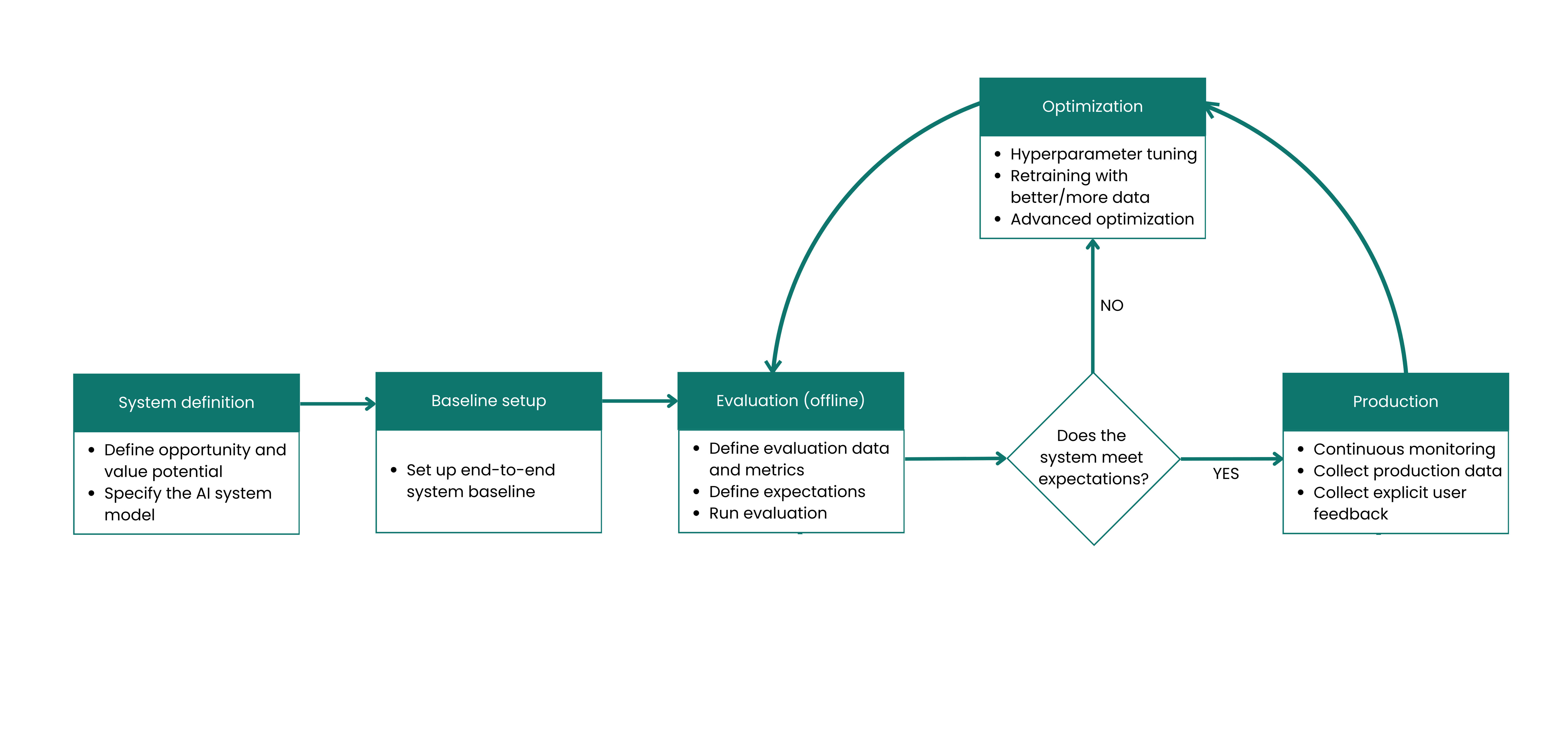

Iterative Development Process

The Iterative Development Process for AI emphasizes rapid cycles of experimentation, evaluation, and refinement rather than attempting to build perfect systems from the start. This approach acknowledges the inherent uncertainty in AI development and prioritizes learning and adaptation.

Principles

Start with simple models and baseline approaches

Establish clear metrics for success before building, and review them before each iteration

Prioritize rapid experimentation over perfect implementation

Use real-world feedback to guide refinement

Implementation steps

1. Define your baseline system

Identify the simplest version of your AI system that can deliver value and provide learning opportunities. Use the AI System Blueprint to ensure you have included all necessary components.
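As one possible illustration of a "simplest version", the sketch below builds a majority-class baseline for a classification task. The task, the label values, and the function names `fit_majority_baseline` and `accuracy` are assumptions made for this example; they do not come from the AI System Blueprint.

```python
from collections import Counter


def fit_majority_baseline(labels):
    """Return the most frequent label seen in the training data."""
    if not labels:
        raise ValueError("labels must not be empty")
    return Counter(labels).most_common(1)[0][0]


def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


# Hypothetical toy data, used only to show the flow.
train_labels = ["ham", "ham", "spam", "ham"]
test_labels = ["ham", "spam", "ham", "ham"]

majority = fit_majority_baseline(train_labels)
baseline_predictions = [majority] * len(test_labels)
baseline_accuracy = accuracy(baseline_predictions, test_labels)
```

A baseline like this gives later iterations a reference score to beat, which makes it easier to tell whether a more complex model is worth its cost.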

2. Establish your evaluation methodology

Define clear, measurable indicators of success before building. These should include both technical metrics (accuracy, latency) and business outcomes (user engagement, cost savings).
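One way to make such indicators explicit is to write them down as data before any model is built. The following is a minimal sketch; the `Metric` class, the metric names, and every target value are hypothetical placeholders to be replaced with your own.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Metric:
    name: str
    target: float
    higher_is_better: bool = True

    def is_met(self, observed: float) -> bool:
        """Compare an observed value against the target, respecting direction."""
        if self.higher_is_better:
            return observed >= self.target
        return observed <= self.target


# Illustrative targets only: two technical metrics and one business outcome.
SUCCESS_CRITERIA = [
    Metric("accuracy", target=0.85),
    Metric("p95_latency_ms", target=300.0, higher_is_better=False),
    Metric("weekly_active_users", target=500.0),
]


def evaluate(observed: Dict[str, float]) -> Dict[str, bool]:
    """Report, per metric, whether the target is met; missing metrics count as not met."""
    return {
        m.name: m.name in observed and m.is_met(observed[m.name])
        for m in SUCCESS_CRITERIA
    }


report = evaluate(
    {"accuracy": 0.90, "p95_latency_ms": 420.0, "weekly_active_users": 500.0}
)
```

Treating a missing measurement as "not met" is a deliberate choice in this sketch: it surfaces metrics that were defined but never instrumented.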

3. Create rapid feedback loops

Design processes for quickly gathering and analyzing feedback from users, stakeholders, and system monitoring. Ensure feedback can be acted upon in the next iteration.

4. Implement short development cycles

Structure development in short sprints (1-4 weeks) with clear goals for each cycle. Each iteration should deliver tangible improvements that can be evaluated against your success metrics.
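To make "tangible improvements" checkable, each cycle can be logged with its goal and measured results. The sketch below assumes a simple in-memory log; the `Iteration` class, the sprint names, and the accuracy figures are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Iteration:
    name: str
    goal: str
    metrics: Dict[str, float] = field(default_factory=dict)


def improvement(previous: Iteration, current: Iteration, metric: str) -> float:
    """Absolute change in one metric between two consecutive iterations."""
    return current.metrics[metric] - previous.metrics[metric]


# Hypothetical history of two short cycles.
history = [
    Iteration("sprint-1", "ship a simple baseline", {"accuracy": 0.70}),
    Iteration("sprint-2", "add keyword features", {"accuracy": 0.78}),
]

gain = improvement(history[0], history[1], "accuracy")
print(f"{history[1].name}: accuracy changed by {gain:+.2f}")
```

Keeping one stated goal per entry also makes it easier to notice when a cycle is trying to change too many things at once.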

5. Monitor and evolve

Continuously monitor system performance in production and be prepared to update models as needed. Use insights from real-world usage to guide the focus of future iterations.
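Production monitoring can start very small. The sketch below tracks accuracy over a rolling window of recent predictions and flags when it falls below a threshold. It assumes ground-truth labels eventually become available for each prediction; the class name `RollingAccuracyMonitor`, the window size, and the threshold are all hypothetical.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Track accuracy over the most recent `window_size` labeled predictions."""

    def __init__(self, window_size, min_accuracy):
        if window_size <= 0:
            raise ValueError("window_size must be positive")
        self.outcomes = deque(maxlen=window_size)  # old entries drop off automatically
        self.min_accuracy = min_accuracy

    def record(self, prediction, actual):
        """Store whether a single prediction was correct."""
        self.outcomes.append(prediction == actual)

    def accuracy(self):
        """Accuracy over the current window, or None if nothing is recorded yet."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_attention(self):
        """Flag only once the window is full, to avoid alerts on tiny samples."""
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.accuracy() < self.min_accuracy


# Illustrative usage with made-up predictions and labels.
monitor = RollingAccuracyMonitor(window_size=4, min_accuracy=0.75)
for prediction, actual in [("a", "a"), ("b", "b"), ("a", "b"), ("b", "a")]:
    monitor.record(prediction, actual)

if monitor.needs_attention():
    print(f"Rolling accuracy {monitor.accuracy():.2f} is below target")
```

A flag from a check like this is a prompt to investigate and to plan the next iteration, not an automatic trigger for retraining.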

Anti-patterns

Perfectionism at the start: Spending excessive time trying to perfect a model before deployment instead of getting real-world feedback

Scope creep: Adding too many optimizations and tweaks per iteration, rather than focusing on the most effective changes

Ignoring user feedback: Focusing solely on technical metrics while disregarding qualitative user experiences

Slow iteration speed: Long iterations that slow down learning and adaptation

Resources

- How to Get User Feedback to Your AI Products - Fast! (Article by Andrew Ng)